The Image Recognition Files: Part 3

Welcome to the new and improved Shipspotter Bot 2.0

Highlights from today’s post:

Once more into the breach of image classification

New techniques, new webcams, new location for Shipspotter Bot

Better models, Mastodon, more warships, and less noise

Hi everyone, it’s been a while! There’s been a lot going on in my personal and professional life recently, some of which I am excited to share with you next week. Aside from all that though, I’ve been sinking¹ plenty of time into revising Shipspotter Bot - my Twitter bot that scans port webcams for naval vessels entering and leaving port. In case you want to catch up on the first two parts of this intermittent series, you can read them below:

To briefly recap: last year, I wrote three Google Cloud Functions that ingest cached images from three webcams in Germany and Greenland, load image classification models from a Google Cloud Bucket, use the models to decide whether each image contains a warship, and, if so, tweet it out. Here’s a schematic of the process:

But I think I can do better. Among the issues faced by the V1 of the bot:

The bot itself only covered three locations, two in Germany and one in Greenland. Not exactly hotspots of naval activity.

Relying on cached images meant I missed any warships that sailed by between image refreshes.

The models themselves were fairly inaccurate and did not follow machine learning best practices.

The code I wrote for the Google Cloud Functions looked like, to put it mildly, the ravings of a madman. It had no modularity, was incredibly inefficient and horribly formatted, imported several unused packages, ran certain sections twice, and required the entire function to be run even if no warships were detected (which happened fairly early in the execution of the code). Insanity.²

First things first, I had to get the code right. I stripped out unused packages and split the rest into separate functions that run only when certain conditions are met (for example, only when a warship is actually detected). That way, each execution runs the bare minimum of code, which keeps execution time, and therefore costs, to a minimum³.
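To make the "run only what's needed" idea concrete, here is a minimal sketch of the early-exit structure. It is not the bot's actual code: `run_once`, `fetch_frame`, `is_warship`, and `post` are hypothetical names, and the fetch, classify, and post steps are passed in as plain callables so the skeleton stays self-contained.

```python
from typing import Callable, Optional


def run_once(
    fetch_frame: Callable[[], Optional[bytes]],
    is_warship: Callable[[bytes], bool],
    post: Callable[[bytes], None],
) -> str:
    """Run one scheduled execution, returning as early as possible."""
    frame = fetch_frame()
    if frame is None:
        # Webcam unavailable: nothing to classify, so stop here.
        return "no_frame"

    if not is_warship(frame):
        # The common case: no warship, so the posting code never runs.
        return "no_warship"

    # Only reached when a warship was detected.
    post(frame)
    return "posted"


# Usage with stand-in callables (all hypothetical):
outbox = []
status = run_once(
    fetch_frame=lambda: b"fake-image-bytes",
    is_warship=lambda frame: False,
    post=outbox.append,
)
print(status, len(outbox))
```

Because the "no warship" branch returns before any posting logic is touched, the most frequent outcome is also the cheapest one.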

I also added support for YouTube-based webcams, which I’m extremely excited about. I don’t want to get into the technical details of exactly what that code looks like, in case Big Google comes after me, but suffice it to say that VidGear rules.

Adding support for YouTube webcams allowed for two major improvements. First, I could finally switch from evaluating cached images of the Kiel webcam to evaluating images from a true live stream. Daily predictions from Kiel doubled, since previously I was limited by the webcam image cache refresh rate (once every two minutes) and now I’m only limited by the code execution time and the amount I want to spend on the bot ($2-$3/month). I’m currently running predictions from Kiel once every minute but could theoretically get predictions every five to ten seconds or so.

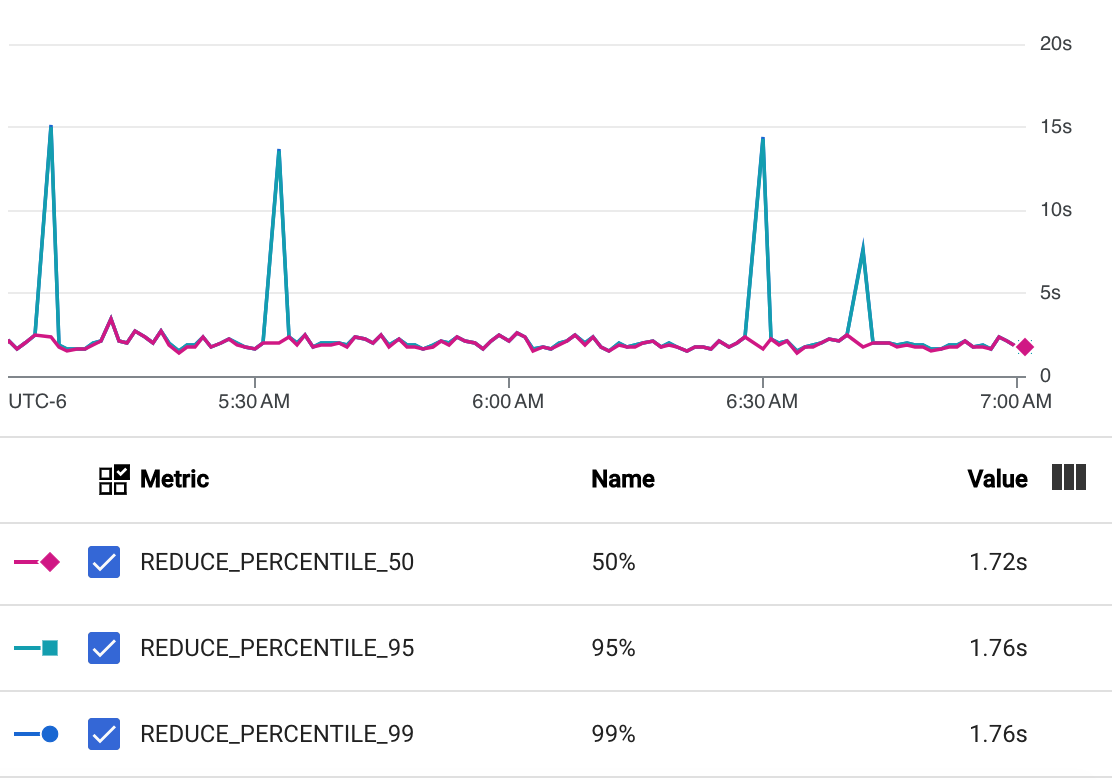

Revising the function and switching to a YouTube webcam also dropped the average execution time of the Kiel function from nearly 7.5 seconds to 1.7 seconds. Since you essentially pay for execution time in a Cloud Function, shaving 5.8 seconds off each execution cuts the compute time per run by roughly three quarters, which adds up to real cost savings.
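For a rough sense of scale, here is a back-of-the-envelope calculation using the numbers above. It assumes the old Kiel function ran once every two minutes (matching the cache refresh rate) and the new one runs once a minute; `daily_compute_seconds` is a hypothetical helper, and no actual Cloud pricing is modeled, only seconds of execution time.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_compute_seconds(interval_s: float, avg_exec_s: float) -> float:
    """Total execution seconds per day for one scheduled function."""
    runs_per_day = SECONDS_PER_DAY / interval_s
    return runs_per_day * avg_exec_s


# Assumed schedules: every 2 minutes before, every minute after.
before = daily_compute_seconds(interval_s=120, avg_exec_s=7.5)
after = daily_compute_seconds(interval_s=60, avg_exec_s=1.7)

per_run_saving = 1 - 1.7 / 7.5
daily_saving = 1 - after / before

print(f"per-run time saved: {per_run_saving:.0%}")
print(f"daily compute: {before:.0f}s -> {after:.0f}s ({daily_saving:.0%} less)")
```

Under those assumptions, the per-run time drops by about 77%, and even with twice as many predictions per day the total daily execution time falls from about 5,400 seconds to about 2,448 seconds, or roughly 55% less.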

The second major improvement is that YouTube allowed me⁴ to slay the white whale of port webcams: San Diego.

San Diego hosts a massive naval base, with numerous destroyers, frigates, submarines, and other vessels arriving and departing every day. You can follow along with the webcams, and the naval traffic, here.

I collected a ton of training images from both the webcam itself and from WarshipCam’s previous ship IDs (again, many, many thanks to him for graciously allowing me to use all his hard work in building this bot). Using those images, I built a brand new, San Diego-focused model and Cloud Function. It’ll identify pretty much any naval vessel of any type coming into or out of San Diego with relatively high accuracy. Here are a few examples:

Look how small, unique, and/or far away some of those ships and subs are! So cool!

Aside from YouTube support, I also revised and retrained all four models. They hadn’t been retrained since I built them around this time last year, so I wanted to ensure they incorporated the most recent year’s worth of training data. I also made some minor technical tweaks to the models themselves, including standardizing input image shape, training the models again with a much lower learning rate, and applying significant image augmentation to the training inputs.
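As a toy illustration of two of those tweaks (standardizing input shape and augmenting training images), here is a dependency-free sketch. It is not the training code: a real pipeline would use an image or deep learning library, and `resize_nearest`, `augment`, the 4x4 target size, and the plus or minus 0.1 brightness range are all assumptions chosen for illustration.

```python
import random
from typing import List

# A grayscale image as rows of pixel values in [0, 1].
Image = List[List[float]]


def resize_nearest(img: Image, height: int, width: int) -> Image:
    """Resize to a fixed (height, width) with nearest-neighbour sampling."""
    src_h, src_w = len(img), len(img[0])
    return [
        [img[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def augment(img: Image, rng: random.Random) -> Image:
    """Randomly flip horizontally, then apply a small brightness shift."""
    flip = rng.random() < 0.5
    rows = [row[::-1] if flip else row[:] for row in img]
    shift = rng.uniform(-0.1, 0.1)
    # Clamp so pixel values stay in [0, 1] after the shift.
    return [[min(1.0, max(0.0, p + shift)) for p in row] for row in rows]


# Usage: every input ends up the same shape before augmentation.
rng = random.Random(0)
raw = [[0.0, 0.5, 1.0], [0.2, 0.4, 0.6]]  # a tiny 2x3 "image"
standard = resize_nearest(raw, height=4, width=4)
sample = augment(standard, rng)
print(len(sample), len(sample[0]))
```

The point of the fixed shape is that every model sees inputs of the same dimensions; the point of the augmentation is that the model sees slightly different versions of each training image, which tends to help with varied lighting and viewing angles.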

Lastly, I got kind of freaked out by all of Elon Musk’s proposed Twitter API changes. Thankfully, my Chicken Little-ing didn’t quite come to pass:

But because of all that concern, I did add a Shipspotter Bot account on Mastodon. It should be an exact, 1:1 replica of the bot’s Twitter predictions, albeit hosted on a Mastodon server (botsin.space, to be exact). So if you’re more of a Mastodon person, go ahead and follow it over there!

Overall, I’m pretty proud of this revision. Obviously, the bot still tweets some incorrect predictions. Remember, the bot makes over 2,200 predictions per day across all four locations. Even with incredibly accurate models (which I would say mine are not), a few incorrect predictions will squeak through. If you hate seeing occasionally irrelevant or noisy posts in your Twitter/Mastodon feeds, then maybe don’t follow the bot.
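To put numbers on that, here is a quick sketch of how many misclassifications to expect per day at the bot's volume. The accuracy values are hypothetical, not measured figures for these models, and `expected_errors` is an illustrative helper. Note that only false positives actually get posted, so the number of bad posts would be some fraction of these totals.

```python
def expected_errors(predictions_per_day: int, accuracy: float) -> float:
    """Expected number of wrong predictions per day at a given accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return predictions_per_day * (1.0 - accuracy)


PREDICTIONS_PER_DAY = 2200  # figure from the post

# Hypothetical accuracy levels, for illustration only.
for accuracy in (0.95, 0.99, 0.999):
    errors = expected_errors(PREDICTIONS_PER_DAY, accuracy)
    print(f"{accuracy:.1%} accurate -> about {errors:.1f} wrong per day")
```

Even a model that is right 99% of the time would get about 22 predictions wrong every day at this volume.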

But if you want to see cool stuff like a Danish patrol boat preparing for an icy North Atlantic mission or a German corvette returning from a sortie into the Baltic every so often, then boy do I have the bot for you.

¹ Pun extremely intended.

² Zen of Python? More like Fever Dream of Python.

⁴ With the collaboration and encouragement of the good folks that run the San Diego port webcam network and WarshipCam, a popular shipspotting Twitter account.

Very nice progress!

I think you didn't mention the use of AI for the code.

Did you use e.g. ChatGPT for the code improvements or are you planning to do so?