I’m currently working on a longer piece about Ethiopia’s Tigray conflict, but in the meantime, I thought I’d share a shorter look at how a little bit of Python (mostly cobbled together from Github and my very basic understanding of coding) can not only save a ton of time, but also build useful resources for open source analysts, academics, journalists, and activists.

In the course of researching my piece, open-source guru Giancarlo Fiorella pointed me toward the Europe External Programme with Africa - an NGO doing invaluable reporting and documentation work on conflicts and refugee policy in Africa. Giancarlo mentioned how EEPA’s three-times-a-week situation reports are the gold standard in English reporting on the Tigray conflict. The only problem is that they are perhaps too well organized.

The situation reports are published in individual PDF files on EEPA’s website. The conflict in the Tigray region has been ongoing since last November, which means there have been nearly 190 situation reports since the beginning of the conflict. While each report is chock-full of information, it’d be a huge time suck to open each of the 180+ files individually, extract the relevant information, document it, save it, and move on. There had to be a better way.

That’s a lot of situation reports.

So, with EEPA’s permission, I scraped all of those 187 PDF files into a single, searchable, chronological file (in a variety of formats) that essentially provides an unbroken history of the Tigray conflict from November 2020 until July 2021.

The files are hosted on the Internet Archive, which you can find here, although do keep in mind that all credit for the situation reports belongs to EEPA. If you or a colleague would like to use it, please feel free! I’ll also update and re-upload them periodically as new situation reports are added.

Scraping the files was also a lot easier than I expected. There were basically four steps:

Crawl the EEPA site that hosts the situation reports, scrape them, and download them all to a folder on my computer.

Use Tika and a nifty tool I found on Github (thank you nadya-p!) to loop through all those PDF files now on my computer, extract the text from them, and save them as .txt files.

Merge all the .txt files into one file, sorted chronologically (the chronological part was actually somewhat difficult and required the numericalSort function in this snippet).

Repeat the merge and sort, but this time with the .pdf files.

And that’s basically it! At the end of it all, I had 182 .pdf files of situation reports, 182 .txt files of situation reports, one merged .pdf file, and one merged .txt file on my computer. I deleted the now-unneeded 364 situation report files and examined the merged files.

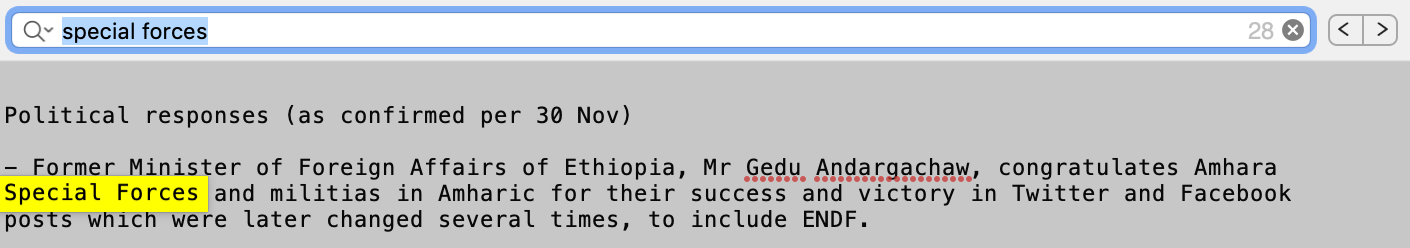

If a researcher wanted to find the first mention of “special forces” in the situation reports, they would have to wade through the first 12 reports before finding anything. In the merged document, “special forces” are a quick control+f away:

If a reporter wanted to search thematically about aid organizations on the ground in the Tigray region, it’s a piece of cake. Searching in the individual situation report files would have required her to open virtually every document to find what she was looking for.

Those are just two examples, but I’m sure there are other interesting angles people can approach the documents from. An n-gram analysis of key terminology? Perhaps a histogram of all explicit locations mentioned in the situation reports? A Google Earth time-lapse map of the locations? The options are truly endless.

Hopefully this has shown how just a few snippets of code can save hours (and probably days) of work. If you have a few hundred .pdfs or .txts you want merged, feel free to get in touch on Twitter or at lineofactualcontrol [at] protonmail [dot] com - no promises, but I’ll do my best to put them all together. Again, feel free to check out and use the merged files if you’d like and keep an eye out for my next Tigray piece in a few weeks.

That's a great example of web scraping used for good. Thanks for sharing